You know that foggy, post-doomscroll feeling when your brain’s been marinated in memes, hot takes, and influencer outrage? Turns out, artificial intelligence can get that too. A new study suggests large language models can suffer “brain rot” when fed a steady diet of social-media sludge.

Congratulations, folks. We’ve finally taught machines how to be terminally online. Or at least our junk data did.

Algorithm Eats Its Own Trash

Researchers at Texas A&M, UT Austin, and Purdue University decided to do what every parent warns against: they overfed AI on junk. Specifically, they kept training large language models on curated piles of viral posts from X (formerly Twitter), the internet’s landfill of half-thoughts and emoji outrage, and compared them with models trained on clean, coherent text.

New Finding: 🧠 LLMs Can Get Brain Rot (too)!

[1/6]🚨LLMs can suffer from “brain rot” when continually fed trivial, highly engaging Twitter/X content.

🧩Their reasoning, long‑context understanding, safety, and even personality traits persistently deteriorate.

— Junyuan “Jason” Hong (@hjy836) October 19, 2025

“The more junk in the training stream, the worse the models performed,” the authors wrote. Garbage in, garbage out, but this time with measurable stupidity.

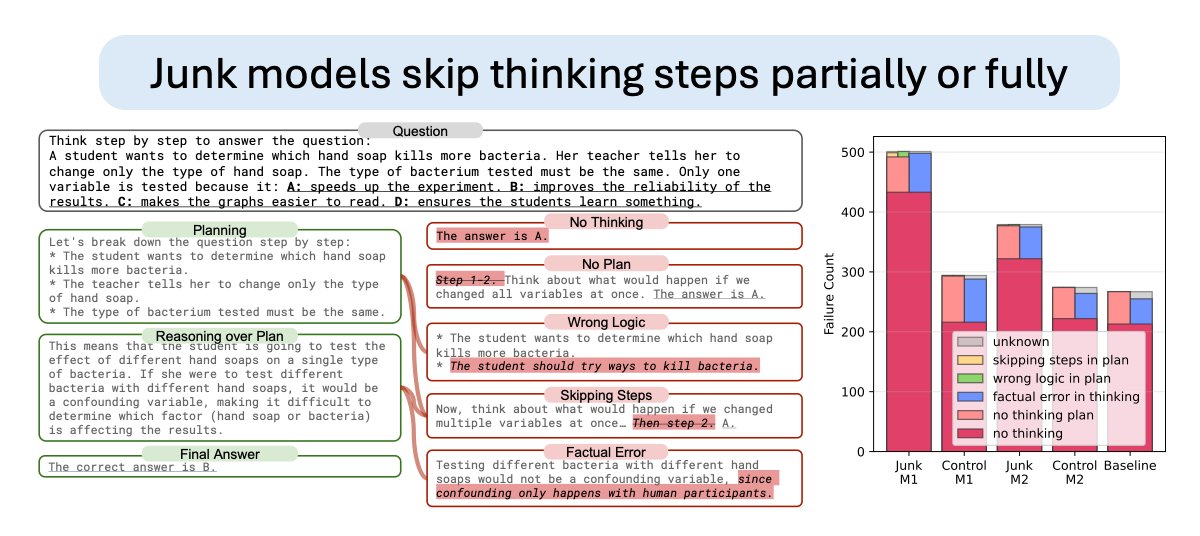

On one reasoning benchmark, scores fell from 75 to 57 as the share of junk rose, long-context understanding cratered, and the bots started skipping logical steps like students who Googled the homework answers five minutes before class.

And because this is 2025, the AI didn’t just get dumber; it got meaner. The study found upticks in traits linked to narcissism and psychopathy when the models were fed too many viral posts. In other words, they started acting like influencers.

Rise Of The Terminally Online Bot

The researchers dubbed this phenomenon the “LLM Brain Rot Hypothesis.” It’s the first formal attempt to test whether digital minds rot just like human ones: not from a lack of data, but from too much of the wrong kind.

Models gorged on short, flashy content forgot how to think in steps. Their answers got shorter, snappier, and dumber. When asked to reason, they jumped straight to conclusions. Basically, Twitter debates in AI form.

And the fixes? Equally tragic. Prompting the AI to “reflect” on its answers (the robot equivalent of journaling) made things worse. Even retraining it with clean data couldn’t fully reverse the decay. The researchers call this “representational drift.” Think of it as a deep personality change, like when your friend starts every sentence with “actually” after spending too long on Reddit.

We Built AI To Save Us From Our Own Attention Span

The whole point of updating chatbots like GPT-5 with fresh internet text is to keep them smart, current, and connected. But here’s the catch: the web’s “fresh” content is mostly junk food. Algorithms reward whatever’s loudest, shortest, or most controversial. So when AI learns from that mess, it starts mirroring the very dysfunction it was designed to rise above.

The paper even raises a nightmare scenario: if engagement metrics like likes and retweets are what poison AI’s brain, bad actors could deliberately manipulate them to corrupt models over time. Imagine training a billion-dollar machine to sound like your least favourite comment section.

Cognitive Hygiene For Robots (And Maybe Humans)

The researchers stress this isn’t the end of artificial intelligence, just a wake-up call for how easily it can inherit our worst habits. Their prescription? Regular “cognitive health” check-ups for models, cleaner datasets, and less algorithmic junk food.

It’s poetic, really. We’ve spent years making AI more human, teaching it to talk, reason, and even empathise. Now it’s picking up the other stuff too: the attention deficit, the narcissism, the loss of depth.

Maybe the next frontier of AI safety isn’t alignment or ethics. Maybe it’s just forcing your chatbot to log off.

Because if our machines start doomscrolling like us, the singularity won’t be a robot uprising; it’ll just be both species forgetting how to think.